DALL-E is a version of GPT-3 trained to produce images from text descriptions using a dataset of billions of text-image pairs.

Understanding DALL-E

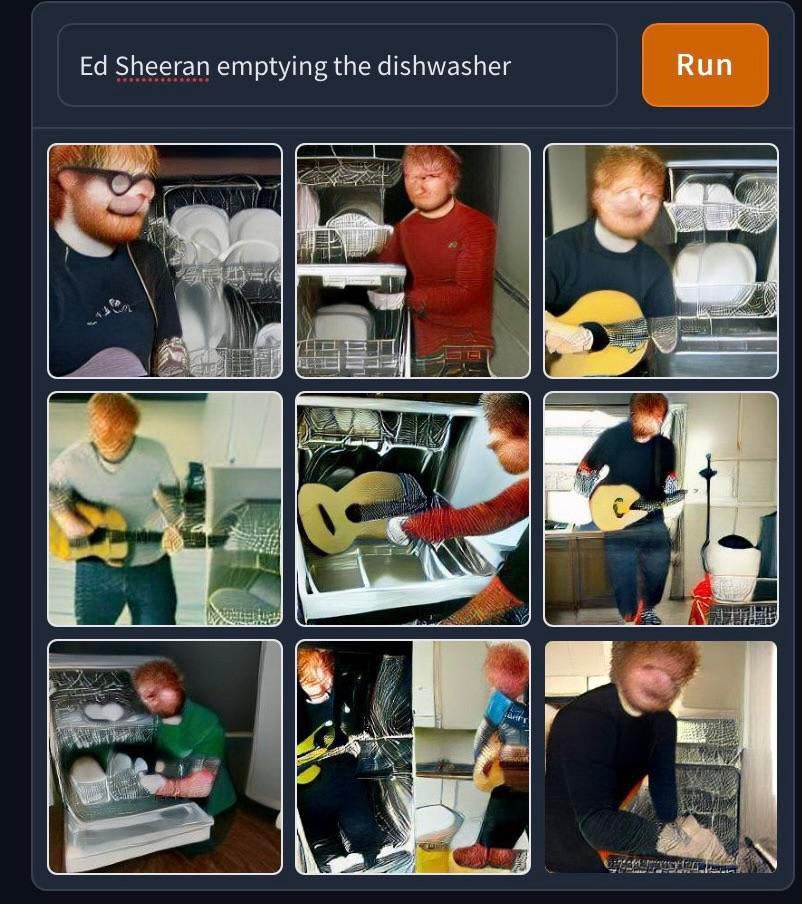

DALL-E – the artificial intelligence model developed by OpenAI, which translates natural language into images – thinks that Ed Sheeran and his guitar are indistinguishable beings.

While this might sound deep, in reality, it’s quite idiotic, and it shows some of the drawbacks (for now) of these models.

A meme account on Twitter, called Weird Dall-E Mini Generations posted the above, and I had a great laugh.

If you check the image in the middle, the prompt (the text that tells the machine what image to produce) says “Ed Sheeran emptying the dishwasher” there is no mention of a guitar.

Yet the interesting thing is the machine learning model, in almost all the images represent Ed Sheeran with a guitar, at the point in which, in the middle, he’s dishwashing the guitar

I know it sounds stupid but those sorts of insights tell us how the machine “represents” something.

In this case, the machine seems to be representing Ed Sheeran and his guitar as indistinguishable things.

According to developer OpenAI, DALL-E is an AI system “that can create realistic images and art from a description in natural language.”

The model can produce images that are realistic and original and can combine various styles, concepts, and attributes. DALL-E can also be used to make realistic edits to existing images.

For instance, it can add or remove elements from a scene while compensating for differences in shadows, textures, and reflections.

The technology is not available to the general public at present with OpenAI previewing DALL-E to trusted friends and family of its employees.

In May 2022, however, the company began adding 1,000 new users from its lengthy waitlist each week.

One publicly available solution is DALL-E mini, a popular open-source version released by an assortment of developers that is often overloaded with user demand.

How does DALL-E work?DALL-E uses a process OpenAI calls “diffusion” to understand the relationship between an image and the text that describes it.

Essentially, the process starts with a pattern of random dots that transform into an image when the model recognizes specific aspects of said image.

DALL-E is a multimodal form of the GPT-3 language model with 12 billion parameters trained on text-image pairs from the internet.

In response to prompts, DALL-E generates multiple images which are then ranked by CLIP – a neural network and image processor trained on over 400 million image-text pairs.

Note that CLIP associates images with captions scraped from the internet as opposed to labeled images from a curated dataset.

From a random selection of 32,768 captions, CLIP can predict which caption is most appropriate for a specific image by learning to link objects with their names and descriptors.

DALL-E then uses this information to draw or create images based on a short, natural-language caption.

When the caption “a painting of capybara sitting in a field at sunrise” was fed into the model, it produced various pictures of capybaras in all shapes and sizes with yellow and orange backgrounds.

The caption “avocado armchair” also produced images that combined both objects in novel ways to produce comfortable seating.

Implications of the DALL-E modelOpenAI is aware that the DALL-E model could be exploited for nefarious purposes and this is one reason why it is not currently available in their API.

Having said that, the company has nonetheless developed a range of “safety mitigations” including:

- Curbing misuse – DALL-E’s content policy prohibits users from creating adult, politically motivated, or violent content. Filters are in place to identify specific text prompts and uploads that may violate the company’s terms of service.

- Phased deployment based on learning – a select number of trusted users are helping OpenAI learn about the capabilities and limitations of DALL-E 2. This is an enhanced version of the original model that offers higher resolution images and is better at caption matching and photorealism. The company plans to invite more people to use the model as iterative improvements are made.

- Preventing harmful generations – misuse has also been curbed by pre-emptively removing explicit content from the training data. Advanced technology has also been used to prevent the generation of photorealistic images of notable public figures and the population more generally.

- DALL-E is a version of GPT-3 trained to produce images from text descriptions using a dataset of billions of text-image pairs.

- DALL-E is a multimodal form of the GPT-3 language model with 12 billion parameters that are trained on text-image pairs from the internet. Over 400 million such pairs are contained within the CLIP model which can predict which caption is the most likely descriptor of a given image.

- The potential to exploit the DALL-E model for nefarious purposes means OpenAI is taking a measured, iterative approach to its release. It has also instituted filters and pre-emptively removed explicit material from the training data to reduce instances of misuse.

Read Next: AI Chips, AI Business Models, Enterprise AI, How Much Is The AI Industry Worth?, AI Economy.

Additional resources:

- Business Models

- Business Strategy

- Distribution Channels

- Go-To-Market Strategy

- Marketing Strategy

- Market Segmentation

- Niche Marketing

The post What is DALL-E? appeared first on FourWeekMBA.

* This article was originally published here

Comments

Post a Comment